Radiologists recently developed artificial intelligence models capable of differentiating optimal from suboptimal chest radiographs.

What’s more, the models can offer feedback at the front end of radiographic equipment on why a radiograph was deemed suboptimal, which could prompt an immediate repeat acquisition at the point of care when required.

The AI model’s development, testing and validation were detailed recently in Academic Radiology. To create the model, radiologists used an AI tool-building platform that lets clinicians develop AI models without any prior training in data science or computer programming. Experts involved in the development phase suggested the software could help reduce inter-radiologist variability.

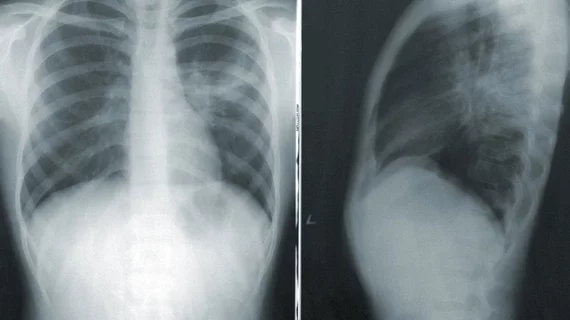

“Higher quality images could result in more consistent and reliable interpretations from projection radiography,” corresponding author of the new paper Mannudeep K. Kalra, MD, with the Department of Radiology at Massachusetts General Hospital and Harvard Medical School, and colleagues indicated. “However, suboptimal quality CXR are extremely common and multifactorial, including those related to radiographers, radiographic equipment, as well as patient morphology and condition.”

The model’s development involved 3,278 chest radiographs from five different sites. A chest radiologist reviewed the images and categorized the reasons for their suboptimality. The de-identified images were then uploaded into an AI server application for training and testing purposes. Model performance was measured based on its area under the curve (AUC) for differentiating between optimal and suboptimal images.

Reasons for suboptimality were categorized as missing anatomy, obscured thoracic anatomy, inadequate exposure, low lung volume or patient rotation. Across these categories, AUCs ranged from 0.87 to 0.94.
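For readers less familiar with the metric, AUC can be read as the probability that a model scores a randomly chosen suboptimal radiograph higher than a randomly chosen optimal one. The short Python sketch below illustrates that calculation for a single category. It is not the study's code: the function name `auc`, the labels and the scores are all made up for illustration.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison (Mann-Whitney U).

    labels: 1 = suboptimal radiograph, 0 = optimal radiograph
    scores: a model's predicted probability that the image is suboptimal
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one example of each class")
    # Count the pairs in which the suboptimal image outranks the optimal one;
    # a tied pair counts as half a win.
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Hypothetical scores for one category (say, patient rotation); not study data.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.92, 0.81, 0.40, 0.10, 0.35, 0.55, 0.20, 0.77]
print(auc(labels, scores))
```

An AUC of 0.5 means the scores are no better than chance at separating the two groups, while 1.0 means every suboptimal image outranks every optimal one.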

Model performance was consistent across age groups, sex and numerous radiographic projections.

Of note, categorizing suboptimality is not time consuming; the experts report that the process takes less than a second per radiograph per category to classify an image as optimal or suboptimal. The team suggested that this not only expedites repeat acquisitions but could also speed up manual audits, which are cumbersome and time consuming.

“An automated process using the trained AI models can help track such information in near time and provide targeted, large-scale feedback to the technologists and the department on specific suboptimal causes,” the group explained, adding that in the long term this feedback could reduce repeat rates, saving time, money and unnecessary radiation exposure.

The study abstract is available here.