AI 'not safe' to be implemented as a solo reader for breast cancer screening exams

Despite considerable progress toward the clinical implementation of artificial intelligence, new data caution against trusting the technology as a single reader in certain screening settings.

The analysis details efforts to deploy a commercially available AI algorithm as a “silent reader” in a double-reading breast screening program in the United Kingdom. Based on that experience, experts from one of the 14 sites where the algorithm was implemented suggested it is “not currently of sufficient accuracy” to be considered for use in the NHS Breast Cancer Screening Program.

They shared their findings this week in Clinical Radiology.

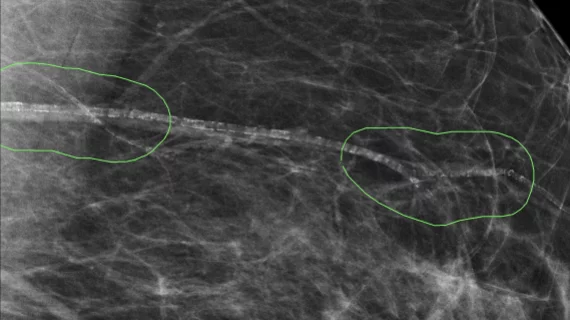

To gather data without affecting workflows, the algorithm was used as a “third reader” for nearly 10,000 breast cancer screening mammograms. The team recorded all cases flagged by AI but not by radiologists (positive discordant) and compared them with the cases the human readers flagged but the algorithm did not (negative discordant).

A total of 1,135 positive discordant cases were identified. Of those, one patient was recalled for additional imaging but was not diagnosed with cancer. There were 139 negative discordant cases reviewed, revealing eight cancers that were flagged by radiologists but not AI. During its deployment, AI did not detect any additional cancers missed by the radiologists.

The algorithm had a higher recall rate than the physicians, likely because it was not trained to evaluate prior imaging as radiologists are. Although some algorithms have been trained to review previous mammograms, which could improve on the recall rates observed in this study, the overall performance still warrants caution when considering AI as a solo reader.

“The high rate of abnormal interpretation by AI, if not monitored by human readers, would create a heavy workload for the assessment clinics, unsustainable for U.K. breast screening units,” Shama Puri, MD, a consultant radiologist with University Hospitals of Derby and Burton NHS Foundation Trust, and colleagues noted. “When monitored by human readers, it will lead to a significant increase in the number of consensus/arbitrations required to be done by the human readers. This carries the risk of unnecessary increase in the recall rates, particularly when arbitration/consensus is led by less experienced screen readers.”

The group signaled that, in its current form, the algorithm is “not safe” to use as a standalone reader, though they suggested that some refinement could improve its utility.
