New algorithm models radiologists' eye movements to interpret chest X-rays

Researchers have developed an algorithm that reads chest radiographs by modeling the way radiologists read them.

What’s more, the algorithm has an edge over standard black-box artificial intelligence applications because providers can see how it reaches its conclusions. Essentially, the AI shows its work.

Experts with the University of Arkansas, where the algorithm was developed, detailed its performance recently in the journal Artificial Intelligence in Medicine.

“When people understand the reasoning process and limitations behind AI decisions, they are more likely to trust and embrace the technology,” said Ngan Le, PhD, a University of Arkansas assistant professor of computer science and computer engineering.

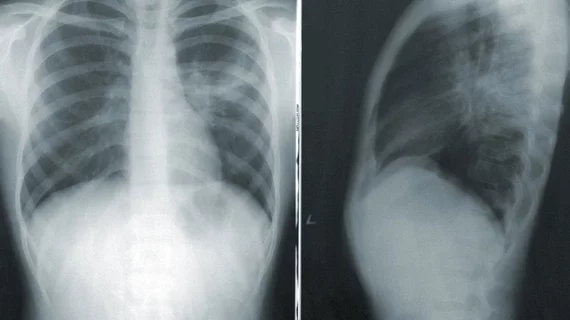

ItpCtrl-AI (short for interpretable and controllable artificial intelligence) was designed to mimic the way a radiologist interprets X-rays. To do this, the team built heatmaps from radiologists’ eye gaze, capturing how their gaze shifts across an image and how long it dwells on particular areas.
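To make the idea of a gaze-based heatmap concrete, here is a minimal sketch, assuming eye-tracking data arrives as a list of fixations, each a (row, column, duration in milliseconds) triple. Each fixation adds a Gaussian "blob" centered on where the radiologist looked, scaled by how long they looked there, and the result is normalized to the range 0 to 1. This is a generic duration-weighted fixation map, not the ItpCtrl-AI code; the function name `gaze_heatmap`, the fixation format and the `sigma` spread parameter are all hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical fixation format: (row, col, duration_ms).
Fixation = Tuple[float, float, float]


def gaze_heatmap(fixations: List[Fixation], height: int, width: int,
                 sigma: float = 2.0) -> List[List[float]]:
    """Build a duration-weighted fixation heatmap scaled to [0, 1].

    Illustrative sketch only: each fixation contributes a Gaussian centered
    on the fixation point, weighted by how long the gaze dwelled there.
    """
    heat = [[0.0] * width for _ in range(height)]
    for fr, fc, duration in fixations:
        for r in range(height):
            for c in range(width):
                dist2 = (r - fr) ** 2 + (c - fc) ** 2
                # Longer dwell time -> larger contribution near the fixation.
                heat[r][c] += duration * math.exp(-dist2 / (2 * sigma ** 2))
    peak = max(max(row) for row in heat)
    if peak > 0:
        # Normalize so the most-attended pixel has value 1.0.
        heat = [[v / peak for v in row] for row in heat]
    return heat


# A long fixation at (2, 2) and a short one at (7, 7) on a 10x10 grid.
heat = gaze_heatmap([(2, 2, 600), (7, 7, 150)], height=10, width=10)
print(round(heat[2][2], 3), round(heat[7][7], 3))
```

In this toy example, the pixel under the 600 ms fixation becomes the hottest point (1.0), while the briefly viewed region gets a much lower weight, which is the "how long it focuses" signal the article describes.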

“By emulating the eye gaze patterns of radiologists, our framework initially determines the focal areas and assesses the significance of each pixel within those regions,” Le and colleagues explained. “As a result, the model generates an attention heatmap representing radiologists’ attention, which is then used to extract attended visual information to diagnose the findings.”
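One common way to use such a heatmap to "extract attended visual information" is attention-weighted pooling: each pixel's feature vector is weighted by its heatmap value, and the weighted vectors are averaged. The sketch below shows that general technique under the assumption that per-pixel features are already available. It is not the authors' architecture (the published model presumably uses learned networks for both steps), and the function name `attended_features` is hypothetical.

```python
from typing import List, Sequence


def attended_features(features: Sequence[Sequence[Sequence[float]]],
                      attention: Sequence[Sequence[float]]) -> List[float]:
    """Attention-weighted average of per-pixel feature vectors.

    features[r][c] is a feature vector for pixel (r, c); attention[r][c] >= 0
    is that pixel's importance, e.g. taken from a gaze-style heatmap.
    """
    dim = len(features[0][0])
    pooled = [0.0] * dim
    total = 0.0
    for feat_row, att_row in zip(features, attention):
        for vec, weight in zip(feat_row, att_row):
            total += weight
            for k in range(dim):
                pooled[k] += weight * vec[k]
    if total == 0:
        raise ValueError("attention map is all zeros")
    # Divide by the total weight so the result is a weighted mean.
    return [v / total for v in pooled]


# 2x2 toy image with 2-dimensional features per pixel.
features = [[[1.0, 0.0], [0.0, 1.0]],
            [[0.0, 0.0], [0.0, 0.0]]]
attention = [[3.0, 1.0],
             [0.0, 0.0]]
print(attended_features(features, attention))  # [0.75, 0.25]

# "Controlling" the model: editing the heatmap changes what it attends to.
edited = [[0.0, 1.0],
          [0.0, 0.0]]
print(attended_features(features, edited))  # [0.0, 1.0]
```

The second call hints at why an explicit attention map is useful for controllability: because the weighting is an inspectable input rather than something buried inside the network, adjusting it directly changes which image regions feed the diagnosis.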

The heatmaps guide the algorithm toward the areas radiologists typically focus on. Because this guidance is controllable, it can be adjusted as needed. It also helps users understand how the model reaches its diagnostic decisions, which many experts, Le included, have said is necessary to bolster confidence in AI reliability.

“If an AI medical assistant system diagnoses a condition, doctors need to understand why it made that decision to ensure it is reliable and aligns with medical expertise,” Le said. “If we don’t know how a system is making decisions, it’s challenging to ensure it is fair, unbiased or aligned with societal values.”

In clinical testing so far, the algorithm has performed well at detecting abnormalities on chest X-rays. Once the team’s findings are validated, the researchers plan to make their dataset (Diagnosed-Gaze++), models and source code publicly available.
