AI reads digital pathology slides to help improve cancer outcomes

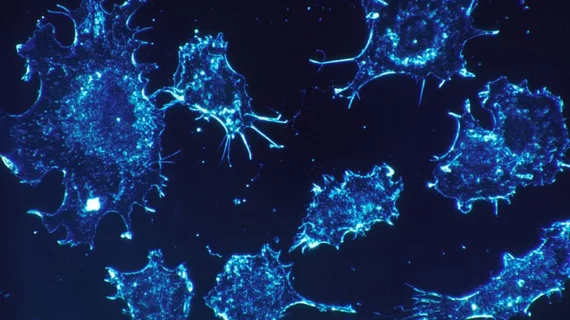

Researchers from UT Southwestern Medical Center in Dallas have developed AI-based software to analyze cancer cells from digitized pathology slides, potentially improving cancer diagnoses.

The tool, detailed in an EBioMedicine study published last month, can sift through the multitudes of cells in a tissue sample and identify tumors’ growth patterns, along with other highly useful information for predicting health outcomes.

"As there are usually millions of cells in a tissue sample, a pathologist can only analyze so many slides in a day,” Guanghua "Andy" Xiao, PhD, professor of population and data sciences at UT Southwestern, said in a statement. “To make a diagnosis, pathologists usually only examine several 'representative' regions in detail, rather than the whole slide. However, some important details could be missed by this approach," Xiao added.

Another major hurdle to studying how tumors interact with their environment on a small scale is the human brain’s inability to detect subtle patterns and quantify cellular spatial distribution, Xiao explained.

The algorithm, named ConvPath, can bypass such obstacles to classify cell types from lung cancer pathology images. Essentially, the approach creates a map of each tissue sample that reveals the spatial distribution of tumor cells and their interactions with other cells important to cancer's growth.
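To make the idea of a cell map concrete, here is a minimal Python sketch of how already-classified cells might be binned into a spatial grid. It is not ConvPath's implementation: the function names `build_cell_map` and `tumor_fraction`, the `tile_size` parameter, the cell-type labels, and the sample coordinates are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def build_cell_map(cells, tile_size):
    """Bin classified cells into square tiles and count each cell type per tile.

    cells: iterable of (x, y, label) tuples, with coordinates in pixels.
    tile_size: edge length of a tile in pixels (hypothetical parameter).
    """
    grid = defaultdict(Counter)
    for x, y, label in cells:
        tile = (int(x // tile_size), int(y // tile_size))
        grid[tile][label] += 1
    return dict(grid)


def tumor_fraction(counts):
    """Share of cells in one tile that carry the (assumed) 'tumor' label."""
    total = sum(counts.values())
    return counts["tumor"] / total if total else 0.0


# Made-up example data: (x, y, predicted cell type).
cells = [
    (10, 12, "tumor"),
    (30, 40, "tumor"),
    (45, 20, "lymphocyte"),
    (120, 15, "stromal"),
    (130, 60, "lymphocyte"),
]

cell_map = build_cell_map(cells, tile_size=100)
for tile, counts in sorted(cell_map.items()):
    print(tile, dict(counts), round(tumor_fraction(counts), 2))
```

A per-tile summary like this is one simple way to see where tumor cells sit next to other cell types. A real pipeline would first have to detect and classify the cells from the slide image, which this sketch takes as given.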

Knowing how cancer cells move or spread is central to understanding a person’s immune response, which could ultimately help physicians tailor treatment plans and select the proper immunotherapy.

The time saved using AI to read slides is perhaps the most important aspect of the research.

"It is time-consuming and difficult for pathologists to locate very small tumor regions in tissue images, so this could greatly reduce the time that pathologists need to spend on each image," Xiao concluded.