AI helps radiologists interpret noncontrast CT scans

A new deep learning model designed to help radiologists read noncontrast CT exams saved time and improved accuracy, according to new findings published in European Radiology. [1]

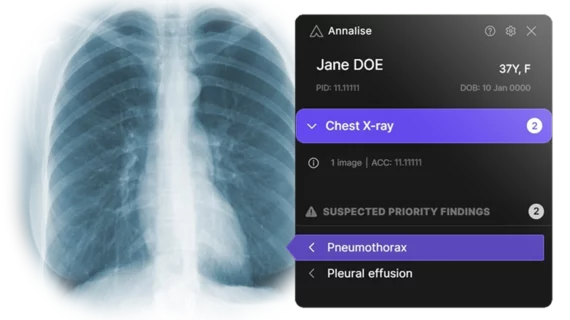

The study's authors focused on 32 radiologists who evaluated 2,848 noncontrast CT brain scans with and without the assistance of advanced artificial intelligence (AI) technology developed by annalise.ai. The radiologists first assessed the scans and made their diagnoses without the aid of the Annalise Enterprise CTB system, then later conducted similar readings with the assistance of the AI technology.

Noncontrast CT brain scans are widely used for the detection of intracranial pathology, but their interpretation can be subject to errors. The study aimed to see how useful AI technology can be as a second set of eyes and to measure how much the system can improve the diagnostic accuracy of trained radiologists.

The deep learning model was trained using 212,484 scans drawn from a private radiology group in Australia. Overall, the accuracy of readings improved when radiologists were assisted by the AI technology: the model improved radiologists' diagnoses for 91 findings, and reading time was also reduced. However, the researchers noted that it is the specifics that make these findings especially interesting.

“Model benefits were most pronounced when aiding radiologists in the detection of subtle findings,” wrote first author Quinlan Buchlak, MD, with the University of Notre Dame Australia, and colleagues. “Model performance for ‘watershed infarct,’ with an AUC of 0.92, indicated that although this finding proved difficult for radiologists to detect, subtle abnormalities were generally present on the CT scan that allowed detection by the model. The AUC for augmented readers was 0.68, while unaugmented readers demonstrated an average AUC of 0.57. The considerable improvement of the radiologists in detecting these infarcts when assisted by the model suggests that the findings on these studies were visible to the human eye even though they were often missed in the unassisted arm of the study.”

The authors concluded that more research is still needed to fully understand the benefits of the technology.

Annalise.ai helped fund this analysis.