Explainable AI model accurately auto-labels chest X-rays from open access datasets

Using an explainable artificial intelligence (AI) model, researchers recently achieved highly accurate labeling of large, publicly available chest radiograph datasets.

Researchers trained, tested and validated the model on more than 400,000 chest X-ray (CXR) images from over 115,000 patients and found that it could automatically label a subset of the data with accuracy equal to or near that of seven radiologists. Experts involved in the model’s development called the results promising, suggesting that a model able to match radiologists when labeling open-access datasets could be a key factor in overcoming limitations of AI implementation.

“Accurate, efficient annotation of large medical imaging datasets is an important limitation in the training, and hence widespread implementation, of AI models in healthcare,” corresponding author Synho Do, PhD, from the Department of Radiology at Massachusetts General Brigham and Harvard Medical School, and co-authors explained. “To date, however, there have been few attempts described in the literature to automate the labeling of such large, open-access databases.”

The authors said their work highlighted a lack of reliability in the labeling of public datasets, explaining that some are not accurate or clean, or have not been validated using “platinum level” reference standards. This limitation inhibits the implementation of AI models in real-world clinical scenarios, the authors suggested.

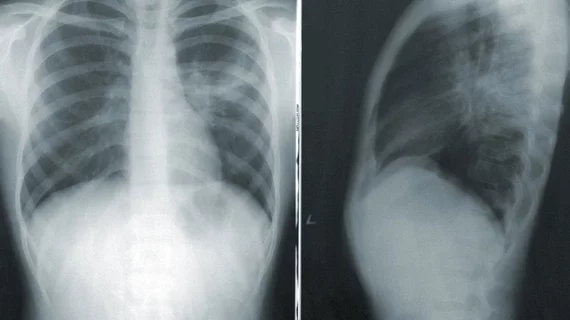

For this study, the researchers developed an explainable AI (xAI) model for standardized, automated labeling, building on a previously validated xAI model. They used a model-derived, atlas-based approach, which allows users to specify a threshold for a desired level of accuracy using the probability-of-similarity (pSim) metric. Using three large open-source public datasets (CheXpert, MIMIC, and NIH), they tested the model’s ability to accurately label five different pathologies on chest radiographs: cardiomegaly, pleural effusion, pulmonary edema, pneumonia and atelectasis. The model’s annotations were compared to those of seven expert radiologists.
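The thresholding idea can be illustrated with a minimal sketch. It assumes each candidate label already comes with a pSim value between 0 and 1; how the model computes that value is not covered here, so the scores, the `Candidate` type and the `auto_label` function below are hypothetical and are not the authors' implementation.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    image_id: str
    pathology: str
    predicted_positive: bool
    psim: float  # hypothetical similarity score, assumed to lie in [0, 1]


def auto_label(candidates, threshold):
    """Split candidates into auto-accepted labels and ones left for human review."""
    accepted, needs_review = [], []
    for c in candidates:
        # Accept the label only when its pSim meets the user-chosen threshold.
        (accepted if c.psim >= threshold else needs_review).append(c)
    return accepted, needs_review


# Illustrative values only, not results from the study.
candidates = [
    Candidate("img-001", "cardiomegaly", True, 0.97),
    Candidate("img-002", "pneumonia", True, 0.62),
    Candidate("img-003", "pleural effusion", False, 0.91),
]
accepted, needs_review = auto_label(candidates, threshold=0.9)
print([c.image_id for c in accepted])
print([c.image_id for c in needs_review])
```

Raising the threshold in a setup like this would send more images to human review in exchange for higher confidence in the labels that are accepted automatically.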

When auto-labeling cardiomegaly and pleural effusion, the model achieved the highest pSim values, with accuracy in line with the seven radiologists. In contrast, lower confidence in label accuracy was noted for pneumonia and pulmonary edema, which are historically more subjective interpretations.

One of the most noteworthy aspects of the model, the experts suggested, is its explainability and the fact that pSim metrics provide feedback on the system’s performance based on the pre-selected level of desired accuracy. This enables researchers to fine-tune the model as needed.
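One way to picture this kind of feedback is a per-pathology summary of how many labels clear the chosen threshold. The sketch below is an assumption about how such a report might be built, not the authors' method; the function name `coverage_by_pathology` and the sample scores are hypothetical.

```python
from collections import defaultdict


def coverage_by_pathology(scores, threshold):
    """Return, per pathology, the fraction of pSim scores at or above the threshold."""
    totals = defaultdict(int)
    kept = defaultdict(int)
    for pathology, psim in scores:
        totals[pathology] += 1
        if psim >= threshold:
            kept[pathology] += 1
    return {p: kept[p] / totals[p] for p in totals}


# Illustrative (pathology, pSim) pairs only, not data from the study.
scores = [
    ("cardiomegaly", 0.95),
    ("cardiomegaly", 0.92),
    ("pneumonia", 0.55),
    ("pneumonia", 0.91),
]
report = coverage_by_pathology(scores, threshold=0.9)
for pathology, fraction in report.items():
    print(f"{pathology}: {fraction:.0%} of labels meet the threshold")
```

A pathology with a low fraction would be a candidate for more training data or manual review.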

“Employing pSim values helps quantify which clinical output labels of the AI model are most reliably annotated and which need to be improved, making it possible to measure system robustness,” the authors wrote. “Our system is ‘smart,’ in that it can access its ‘memory’ of examination clinical output labels present in the training set, and quantitatively estimate their similarity to clinical output labels in the new, external examination data.”

The authors acknowledged that a limitation of any AI research is the quality of the original training data, which varies in open-access datasets. They believe that use of the pSim metric for auto-labeling could be beneficial in that scenario because it requires less labeled data to build an accurate model.

The full text of the study can be found in Nature Communications.[1]

Reference: