Natural language processing generates CXR captions comparable to those from radiologists

The emergence of natural language processing (NLP) technology has the potential to advance AI in the field of radiology, but many have questioned whether the reliability of NLP in reporting can compare to that of human radiologists.

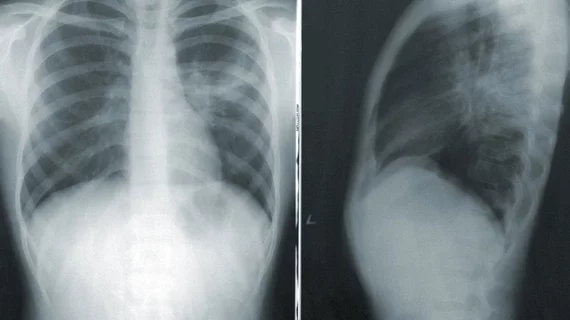

Although numerous AI algorithms have proven valuable in helping radiologists identify pulmonary abnormalities on chest imaging, human intervention is still required to report these findings. Convolutional neural networks (CNNs) used to train algorithms must be supervised by radiologists for the purpose of annotation, meaning that AI still cannot fully operate on its own; even if it can identify abnormal findings, it cannot yet report on them. But recent developments in NLP technology have improved its ability to recognize semantics and context, making it more likely that NLP could generate coherent medical reports without radiologist assistance.

These developments are promising, but are they effective?

That’s exactly what a group of experts from Shanghai Jiao Tong University School of Medicine in China sought to understand with their latest research.

“Since most previous studies on CXR interpretation were retrospective tests on selected public data sets, a prospective study in a clinical practice setting is needed to evaluate AI-assisted CXR interpretation,” the group explained. “Therefore, we applied the BERT model to extract language entities and associations from unstructured radiology reports to train CNNs and generated free-text descriptive captions using NLP.”

The new JAMA paper detailing the analysis was co-authored by Yaping Zhang, MD, PhD, Mingqian Liu, MSc and Lu Zhang, MD. The team's analysis included a training dataset of nearly 75,000 chest radiographs labeled with NLP for 23 abnormal findings, in addition to a retrospective dataset and a prospective dataset of more than 5,000 participants.

For the study, radiology residents drafted reports based on randomly assigned captions from three caption generation models: a normal template, NLP-generated captions and rule-based captions. Radiologists, who were blinded to the original captions, finalized the reports. Experts used these to compare accuracy and reporting times.

NLP reports achieved AUCs ranging from 0.84 to 0.87 for each dataset. NLP-generated caption reporting time was recorded as 283 seconds, which, the experts note, was significantly shorter than the reporting times for the normal template (347 seconds) and the rule-based model (296 seconds). The NLP-generated CXR captions also showed good consistency with radiologists.
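To put the reporting times in perspective, the short sketch below works out how much faster the NLP-assisted workflow was than each alternative. It uses only the three times quoted above; the dictionary keys and the `time_saved` helper are illustrative names, not anything taken from the study.

```python
# Reporting times in seconds, as quoted in the article.
# The key names are hypothetical labels chosen for this example.
reporting_times = {
    "nlp": 283,
    "normal_template": 347,
    "rule_based": 296,
}


def time_saved(baseline, times=reporting_times):
    """Return (seconds saved, percent saved) for NLP captions versus a baseline."""
    saved = times[baseline] - times["nlp"]
    return saved, round(100 * saved / times[baseline], 1)


for baseline in ("normal_template", "rule_based"):
    seconds, percent = time_saved(baseline)
    print(f"NLP vs {baseline}: {seconds} s faster ({percent}% less time)")
```

By this arithmetic, NLP-generated captions saved about 64 seconds per report (roughly 18%) against the normal template and 13 seconds (roughly 4%) against the rule-based captions.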

The authors suggested that these findings indicate that NLP can be used to generate CXR captions and might even make the process more efficient, though additional research on a broader dataset is necessary.

The study is available here.