New study highlights the need to include more challenging datasets in AI training

A recent analysis of an already CE-marked artificial intelligence-based system for identifying pneumothorax (PTX) on chest radiographs revealed a weak point in what has largely been considered a reliable support tool.

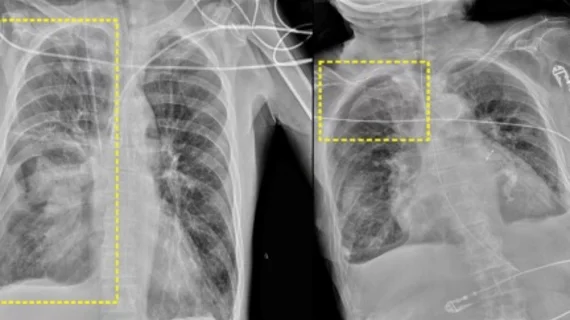

Although the tool’s accuracy for detecting PTX was in line with that of human readers, its performance suffered when radiographs were obtained at the bedside rather than with the patient standing. Standing chest X-rays are ideal, but in practice many patients imaged for suspected PTX are lying supine in bed, especially in trauma settings.

Previous research on AI systems for detecting PTX has shown promise. However, the authors of this latest analysis note that many prior studies lacked data from trauma settings or from patients with more challenging presentations.

“Most published studies focused on specific populations of patients with PTX, with a lower prevalence of emergency setting datasets, acquired in less than ideal conditions, which could potentially lead to worse performances of AI,” corresponding author Francesco Rizzetto, from the Postgraduation School of Diagnostic and Interventional Radiology at the University of Milan in Italy, and colleagues noted. “The aim of our study was to test the performance of an AI software for PTX detection on a further heterogeneous patient cohort, to review its role in a clinical setting, while also assessing potential factors which could influence its diagnostic accuracy, using computed tomography as reference standard.”

For the study, the team retrospectively processed chest X-rays from patients with suspected PTX, acquired between January 2021 and March 2023, through the AI system. Every included patient also underwent a chest CT at the time, which served as the reference standard. The AI’s performance was compared with that of the radiologists who originally interpreted the exams.

The AI system achieved an accuracy of 74%, a sensitivity of 66% and a specificity of 93%. The radiologists’ accuracy was slightly higher, at 77%, with higher sensitivity but lower specificity. Although overall performance was similar, the AI’s performance declined more than the radiologists’ when patients were not ideally positioned.
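For readers less familiar with these metrics, the minimal Python sketch below shows how accuracy, sensitivity and specificity are typically derived from a confusion matrix against a CT reference standard. The counts are purely hypothetical and are not the study's data. The `accuracy_from_rates` helper (also hypothetical) illustrates a standard identity: accuracy is a prevalence-weighted blend of sensitivity and specificity, which is why an overall accuracy figure always falls between the two.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Reader calls versus the CT reference standard (hypothetical counts)."""
    tp: int  # PTX on CT, flagged on the radiograph
    fn: int  # PTX on CT, missed on the radiograph
    tn: int  # no PTX on CT, radiograph read as negative
    fp: int  # no PTX on CT, radiograph flagged anyway

    @property
    def total(self) -> int:
        return self.tp + self.fn + self.tn + self.fp


def sensitivity(c: ConfusionCounts) -> float:
    # Share of true PTX cases that the reader detected.
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    # Share of PTX-free cases that the reader correctly called negative.
    return c.tn / (c.tn + c.fp)


def accuracy(c: ConfusionCounts) -> float:
    # Share of all cases called correctly.
    return (c.tp + c.tn) / c.total


def prevalence(c: ConfusionCounts) -> float:
    # Share of cases with PTX on the reference standard.
    return (c.tp + c.fn) / c.total


def accuracy_from_rates(sens: float, spec: float, prev: float) -> float:
    # Accuracy = prevalence * sensitivity + (1 - prevalence) * specificity.
    return prev * sens + (1 - prev) * spec


# Hypothetical cohort: 200 PTX cases and 100 PTX-free cases.
counts = ConfusionCounts(tp=120, fn=80, tn=90, fp=10)
print(f"sensitivity={sensitivity(counts):.2f}")
print(f"specificity={specificity(counts):.2f}")
print(f"accuracy={accuracy(counts):.2f}")
```

Because PTX cases make up two-thirds of this made-up cohort, its accuracy sits closer to sensitivity than to specificity. The same logic applies to any reported figures, although the article does not state the study's prevalence.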

The system performed worse in this study than in prior research. The group attributed this to the type of exams the software was tasked with reading. Nearly 70% of the exams in this analysis were acquired at the bedside from supine patients, meaning the images were captured in the anteroposterior projection rather than the standing posteroanterior projection.

“Supine patients may present with a greater degree of tissue superposition at the lung apices, and with critical disease that requires the presence of medical devices, which in turn may add to such superposition in those zones, thus hindering the potential of the software in detecting small PTX,” the group explained, adding that these cases can be challenging for human radiologists to interpret as well.

The group suggested that, similar to human readers, the AI system simply needs additional training on more challenging datasets. This could pave the way for it to serve as a standalone reader for PTX in the future.
