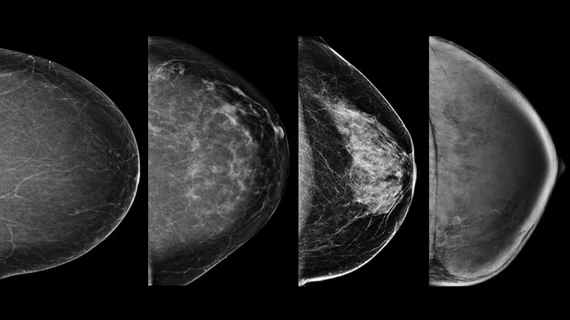

Experts develop a deep learning model that can estimate breast density

Experts at the University of Manchester in the United Kingdom recently developed and trained a deep learning model capable of estimating breast density.

Nearly 160,000 full-field digital mammography images with assigned density values were used to develop a procedure that estimates a density score for each image [1]. When tested, the model performed comparably to human experts, lead researcher Professor Susan M. Astley noted.

“The advantage of the deep learning-based approach is that it enables automatic feature extraction from the data itself,” explained Astley. “This is appealing for breast density estimations since we do not completely understand why subjective expert judgments outperform other methods.”

Instead of building the model from the ground up, the team started from two deep learning models that had already been trained on a data set of more than 1 million images, an approach known as transfer learning. This allowed the team to train the new model with less data. The goal was to feed the model a mammogram as input and have it produce a density score as output.
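To make the transfer learning idea concrete, here is a minimal Python sketch. It is not the team's implementation. It assumes a hypothetical `frozen_features` function standing in for a network pretrained on a large nonmedical image data set. That extractor is never updated, and only a small linear head is fitted to density labels. Because so few parameters are learned, far less labeled data is needed than when training a whole network from scratch.

```python
from typing import List

Image = List[List[float]]  # grayscale pixel values in [0, 1]


def frozen_features(image: Image) -> List[float]:
    """Hypothetical stand-in for a pretrained extractor; never updated during training."""
    pixels = [p for row in image for p in row]
    n = len(pixels)
    mean_intensity = sum(pixels) / n
    bright_fraction = sum(1 for p in pixels if p > 0.5) / n
    return [mean_intensity, bright_fraction]


def predict(w: List[float], x: List[float]) -> float:
    """Linear head: dot(weights, features) + bias (bias stored as the last weight)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + w[-1]


def train_head(features: List[List[float]], targets: List[float],
               lr: float = 0.1, epochs: int = 2000) -> List[float]:
    """Fit only the linear head by full-batch gradient descent on mean squared error."""
    dim = len(features[0])
    m = len(features)
    w = [0.0] * (dim + 1)  # last entry is the bias
    for _ in range(epochs):
        grad = [0.0] * (dim + 1)
        for x, y in zip(features, targets):
            err = predict(w, x) - y
            for i in range(dim):
                grad[i] += 2.0 * err * x[i] / m
            grad[dim] += 2.0 * err / m
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


if __name__ == "__main__":
    # Toy 2x2 "images" with made-up percent-density labels (illustrative only).
    images = [
        [[0.1, 0.2], [0.1, 0.2]],
        [[0.9, 0.8], [0.2, 0.1]],
        [[0.9, 0.9], [0.8, 0.7]],
    ]
    labels = [0.0, 50.0, 100.0]
    feats = [frozen_features(img) for img in images]  # extractor stays fixed
    head = train_head(feats, labels)                   # only the head is learned
    print([round(predict(head, f), 1) for f in feats])
```

A real system would use a deep network's activations as `frozen_features` and often a more robust regressor than plain gradient descent. The split between a fixed extractor and a small learned head is the part this sketch illustrates.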

This involved preprocessing the images to make the task less computationally intensive, the experts explained. The pretrained models then extracted features from the processed images, and those features were mapped to density scores. Finally, the two models' scores were combined to give a final estimate of breast density.
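The pipeline described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the study's code. The block-averaging `downsample` step, the two hypothetical extractors `features_a` and `features_b`, the made-up head weights, and the simple averaging of the two scores are all placeholders chosen for clarity. The article does not say how the models' scores were combined.

```python
from statistics import mean
from typing import Callable, List, Sequence

Image = List[List[float]]  # grayscale pixel values in [0, 1]


def downsample(image: Image, factor: int) -> Image:
    """Preprocessing: block-average by `factor` in each dimension to cut compute cost."""
    rows, cols = len(image) // factor, len(image[0]) // factor
    return [
        [
            mean(image[r * factor + i][c * factor + j]
                 for i in range(factor) for j in range(factor))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def features_a(image: Image) -> List[float]:
    """Hypothetical frozen extractor A: mean intensity."""
    return [mean(p for row in image for p in row)]


def features_b(image: Image) -> List[float]:
    """Hypothetical frozen extractor B: fraction of bright pixels."""
    pixels = [p for row in image for p in row]
    return [sum(1 for p in pixels if p > 0.5) / len(pixels)]


def linear_head(weights: Sequence[float], bias: float) -> Callable[[List[float]], float]:
    """Map a feature vector to a density score (in practice the weights are learned)."""
    def score(feats: List[float]) -> float:
        return sum(w * f for w, f in zip(weights, feats)) + bias
    return score


# Made-up head parameters for illustration; a real system would fit these to labeled data.
head_a = linear_head([100.0], 0.0)
head_b = linear_head([100.0], 0.0)


def estimate_density(image: Image, factor: int = 2) -> float:
    """Preprocess, extract features with both models, score each, then combine."""
    small = downsample(image, factor)
    score_a = head_a(features_a(small))
    score_b = head_b(features_b(small))
    return (score_a + score_b) / 2.0  # simple average: an assumed combination rule


if __name__ == "__main__":
    dense = [[0.8] * 4 for _ in range(4)]
    fatty = [[0.2] * 4 for _ in range(4)]
    print(estimate_density(dense), estimate_density(fatty))
```

Averaging is the simplest way to combine two models' outputs. A weighted average, or a second regression fitted on both scores, would be natural alternatives.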

Not only did the final product offer accurate breast density assessments, but it also required less computation time, fewer computing resources, and less memory. This approach could make future model development less time-intensive and require significantly less data, the team explained.

“... we have demonstrated that using a transfer learning approach with deep features results in accurate breast density predictions,” the authors wrote. “This approach is computationally fast and cheap, which can enable more analysis to be done and smaller datasets to be used.”

Of note, the two pretrained models used in this work were trained on a nonmedical data set. Model performance would likely improve if medical images were included, the team suggested.

The study is available in the Journal of Medical Imaging.